Search results

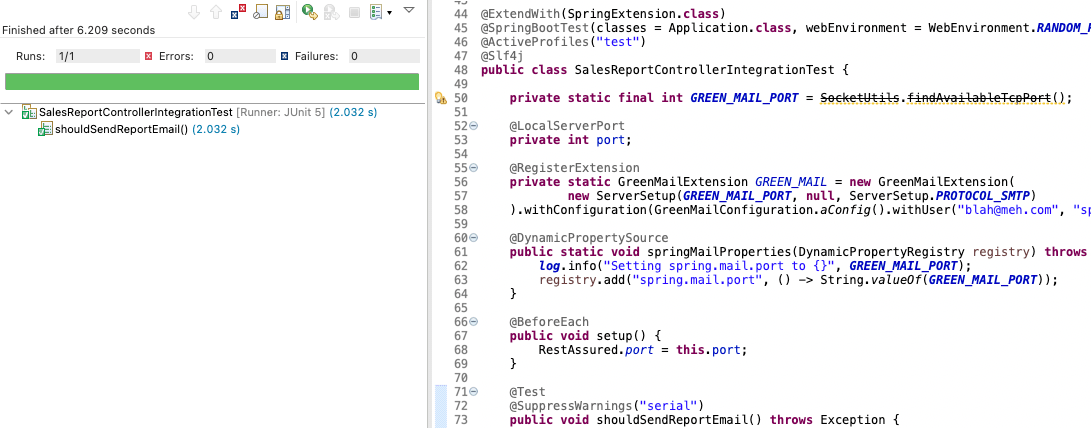

Writing integration tests with GreenMail and Jsoup for Spring Boot applications that send Emails

1. OVERVIEW

Your organization implemented and deployed Spring Boot applications to send emails from Thymeleaf templates.

Let’s say they include reports with confidential content, intellectual property, or sensitive data. Is your organization testing these emails?

How would you verify these emails are being sent to the expected recipients?

How would you assert these emails include the expected data, company logo, and/or file attachments?

This blog post shows you how to write integration tests with GreenMail and Jsoup for Spring Boot applications that send emails.

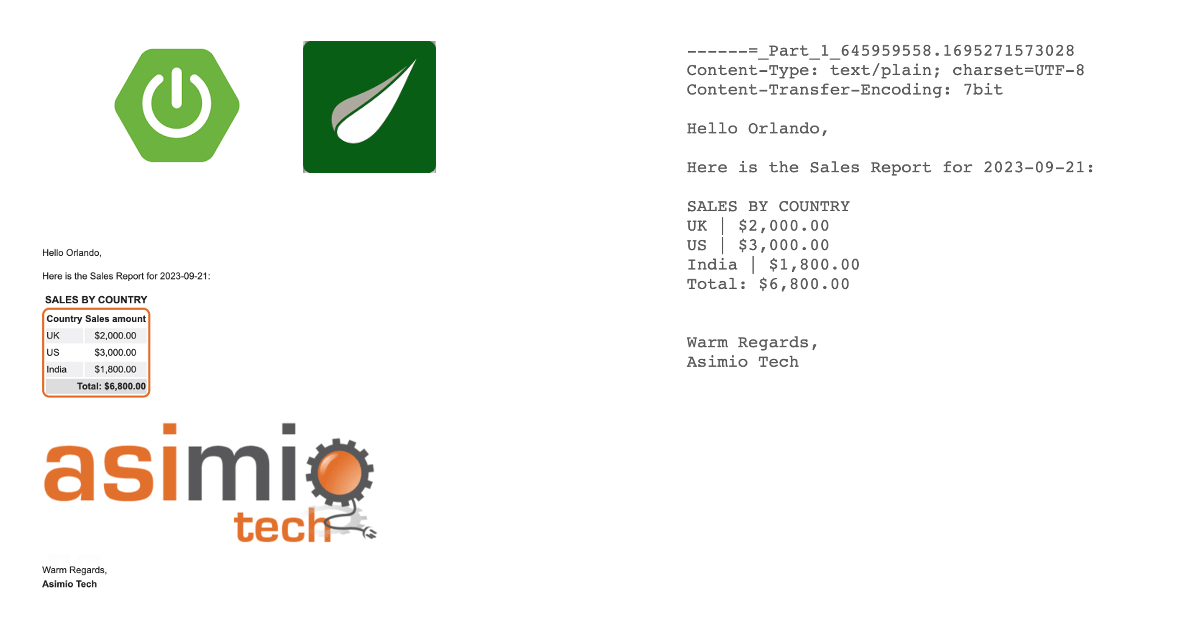

Sending Emails with Spring Boot and Thymeleaf

1. OVERVIEW

Your team used Java and Spring Boot to implement Logistics functionality, or a Hotel reservation system, or an e-commerce Shopping Cart, you name it.

Now Business folks would like to receive emails with daily shipment reports, or weekly bookings, or abandoned shopping carts.

Business people would like to get emails with consolidated data so they can make decisions based on these reports.

Spring Framework, and Spring Boot in particular, integrates seamlessly with template engines to send HTML and/or text emails.

This blog post covers how to send HTML and text emails using Spring Boot and the Thymeleaf template engine.

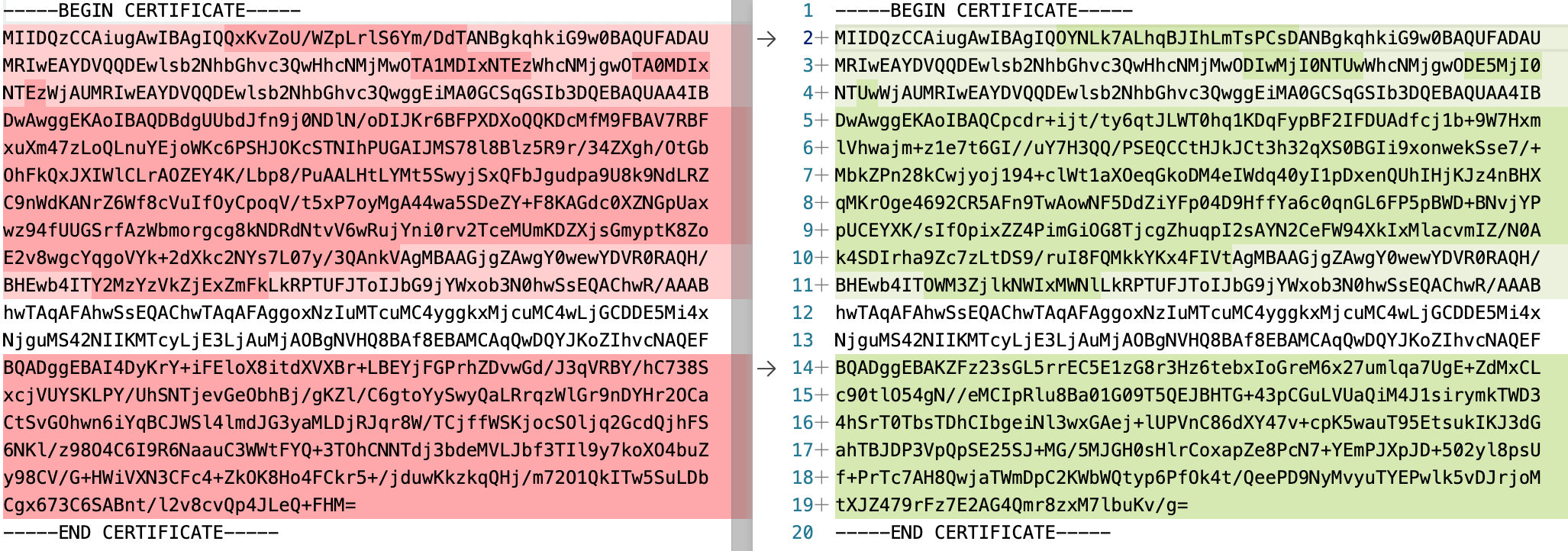

Extract Certificate and add it to a Truststore Programmatically

1. OVERVIEW

A few days ago I was working on the accompanying source code for:

- Seeding Cosmos DB Data to run Spring Boot 2 Integration Tests

- Writing dynamic Cosmos DB queries using Spring Data Cosmos repositories and ReactiveCosmosTemplate

blog posts, and ran into the same issue a few times.

The previously running azure-cosmos-emulator Docker container wouldn’t start.

I had to run a new azure-cosmos-emulator container, but every time I ran a new container, the emulator’s PEM-encoded certificate changed. That meant the Spring Boot application failed to start because it couldn’t connect to the Cosmos DB anymore. The SSL handshake failed.

A manual solution involved running a bash script that deletes the invalid certificate from the TrustStore, and adds a new one generated during the new Docker container start-up process.

That would be fine if you only need to use this approach once or twice during the development phase.

But this manual approach won’t work for running Integration Tests as part of your CI/CD pipeline, regardless of whether an azure-cosmos-emulator container is already running or you rely on Testcontainers to run a new one.

This blog post covers how to programmatically extract an SSL certificate from a secure connection and add it to a TrustStore that you can use in your integration tests, for instance.
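
As a rough sketch of the idea, assuming only JDK classes: the hypothetical `TrustStoreSketch` below opens a TLS connection, captures whatever certificate chain the server presents, and stores it in a new trust store. The class name, method names, and alias prefix are made up for illustration and may differ from the approach used in the post's accompanying source code. The accept-all trust manager is there only to read the chain from an endpoint you already trust, such as a local emulator.

```java
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.KeyStore;
import java.security.SecureRandom;
import java.security.cert.Certificate;
import java.security.cert.X509Certificate;
import javax.net.ssl.SSLContext;
import javax.net.ssl.SSLSocket;
import javax.net.ssl.TrustManager;
import javax.net.ssl.X509TrustManager;

public class TrustStoreSketch {

    // Opens a TLS connection and returns the certificate chain the server presents.
    // The permissive trust manager only captures the chain; do not reuse it for real traffic.
    public static Certificate[] fetchServerCertificates(String host, int port) throws Exception {
        TrustManager acceptAll = new X509TrustManager() {
            @Override public void checkClientTrusted(X509Certificate[] chain, String authType) { }
            @Override public void checkServerTrusted(X509Certificate[] chain, String authType) { }
            @Override public X509Certificate[] getAcceptedIssuers() { return new X509Certificate[0]; }
        };
        SSLContext context = SSLContext.getInstance("TLS");
        context.init(null, new TrustManager[] { acceptAll }, new SecureRandom());
        try (SSLSocket socket = (SSLSocket) context.getSocketFactory().createSocket(host, port)) {
            socket.startHandshake();
            return socket.getSession().getPeerCertificates();
        }
    }

    // Creates an empty, in-memory trust store of the JVM's default type.
    public static KeyStore emptyTrustStore() throws Exception {
        KeyStore trustStore = KeyStore.getInstance(KeyStore.getDefaultType());
        trustStore.load(null, null);
        return trustStore;
    }

    // Adds each certificate of the chain under a hypothetical alias such as "emulator-0".
    public static void addCertificates(KeyStore trustStore, String aliasPrefix, Certificate[] chain)
            throws Exception {
        for (int i = 0; i < chain.length; i++) {
            trustStore.setCertificateEntry(aliasPrefix + "-" + i, chain[i]);
        }
    }

    // Writes the trust store to disk so that a test JVM can point to it.
    public static void save(KeyStore trustStore, Path file, char[] password) throws Exception {
        try (OutputStream out = Files.newOutputStream(file)) {
            trustStore.store(out, password);
        }
    }
}
```

In a test setup you could call `fetchServerCertificates` with the emulator's host and port (placeholders you would supply), add the result to an empty trust store, save it, and then point the `javax.net.ssl.trustStore` and `javax.net.ssl.trustStorePassword` system properties at the saved file before the application context starts.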

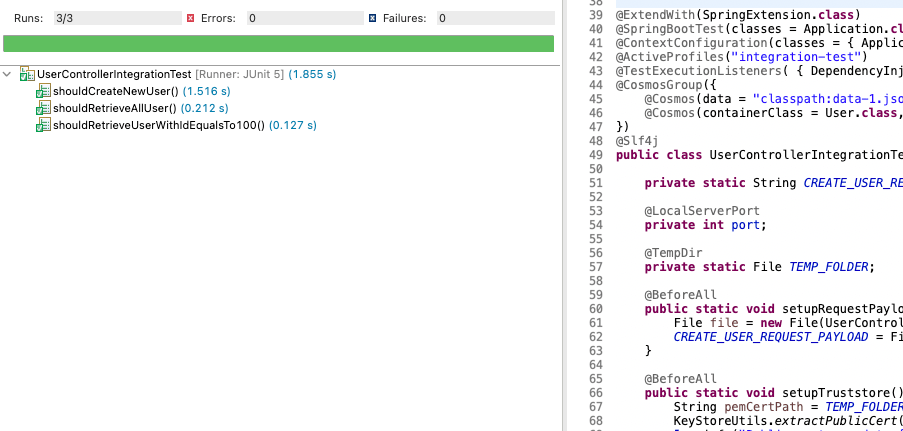

Seeding Cosmos DB Data to run Spring Boot 2 Integration Tests

1. OVERVIEW

Let’s say you are deploying your Spring Boot RESTful applications to Azure.

Some of these Spring Boot applications might have been modernization rewrites to use Cosmos DB instead of relational databases.

You might have even added support to write dynamic Cosmos DB queries using Spring Data Cosmos. And let’s also assume you wrote unit tests for REST controllers, business logic, and utility classes.

Now you need to write integration tests to verify that the interactions between different parts of the system work well, including retrieving from, and storing to, a NoSQL database like Cosmos DB.

This blog post shows you how to write a custom Spring TestExecutionListener to seed data in a Cosmos database container. Each Spring Boot integration test will run starting from a known Cosmos container state, so that you won’t need to force the tests to run in a specific order.

Writing dynamic Cosmos DB queries using Spring Data Cosmos repositories and ReactiveCosmosTemplate

1. OVERVIEW

Let’s say you need to write a RESTful endpoint that takes a number of request parameters and uses them to filter data from a database.

Something like:

/api/users?firstName=...&lastName=...

Some of the request parameters are optional, so you would only include query conditions in each SQL statement depending on the request parameters sent with each request.

You’ll be writing dynamic SQL queries.
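
To make the idea concrete, here is a minimal sketch in plain Java that only adds a condition when the matching request parameter is present. It is not the Spring Data Cosmos API: `DynamicQuerySketch`, `buildUserQuery`, the `c` alias, and the `@firstName`/`@lastName` parameter names are assumptions chosen to match the example endpoint above.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class DynamicQuerySketch {

    // Simple holder for the generated query text and its named parameters.
    public static final class Query {
        public final String sql;
        public final Map<String, Object> parameters;

        Query(String sql, Map<String, Object> parameters) {
            this.sql = sql;
            this.parameters = parameters;
        }
    }

    // Builds a query whose WHERE clause depends on which optional filters were sent.
    public static Query buildUserQuery(String firstName, String lastName) {
        List<String> conditions = new ArrayList<>();
        Map<String, Object> parameters = new LinkedHashMap<>();

        if (firstName != null && !firstName.isEmpty()) {
            conditions.add("c.firstName = @firstName");
            parameters.put("@firstName", firstName);
        }
        if (lastName != null && !lastName.isEmpty()) {
            conditions.add("c.lastName = @lastName");
            parameters.put("@lastName", lastName);
        }

        String sql = "SELECT * FROM c";
        if (!conditions.isEmpty()) {
            sql += " WHERE " + String.join(" AND ", conditions);
        }
        return new Query(sql, parameters);
    }
}
```

Values travel as named parameters instead of being concatenated into the query text, which keeps the sketch safe from injection. A request that sends no filters simply yields a query without a WHERE clause.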

I have covered different solutions when the data comes from a relational database:

- Writing dynamic SQL queries using Spring Data JPA repositories and EntityManager

- Writing dynamic SQL queries using Spring Data JPA Specification and Criteria API

- Writing dynamic SQL queries using Spring Data JPA repositories and Querydsl

But what if the data store is not a relational database? What if the data store is a NoSQL database?

More specifically, what if the database is Azure Cosmos DB?

This tutorial teaches you how to extend Spring Data Cosmos for your repositories to access the ReactiveCosmosTemplate so that you can write dynamic Cosmos DB queries.

Unit testing Spring's TransactionTemplate, TransactionCallback with JUnit and Mockito

1. OVERVIEW

Let’s say you had to write a Business Service implementation using Spring TransactionTemplate instead of the @Transactional annotation.

As a quick example, you need to retrieve film reviews from external partners using RESTful APIs.

You also need to update the film-related rows in relational database tables.

And lastly, you need to publish a JMS message for interested parties to process these changes.

A made-up example, but the point is that you need to write business logic that involves sending RESTful API requests to external services, executing multiple SQL statements, and publishing a message to a JMS queue.

You have already realized that the RESTful API requests and publishing a JMS message shouldn’t be part of the JDBC transaction, so you end up using TransactionTemplate, and a code snippet similar to:

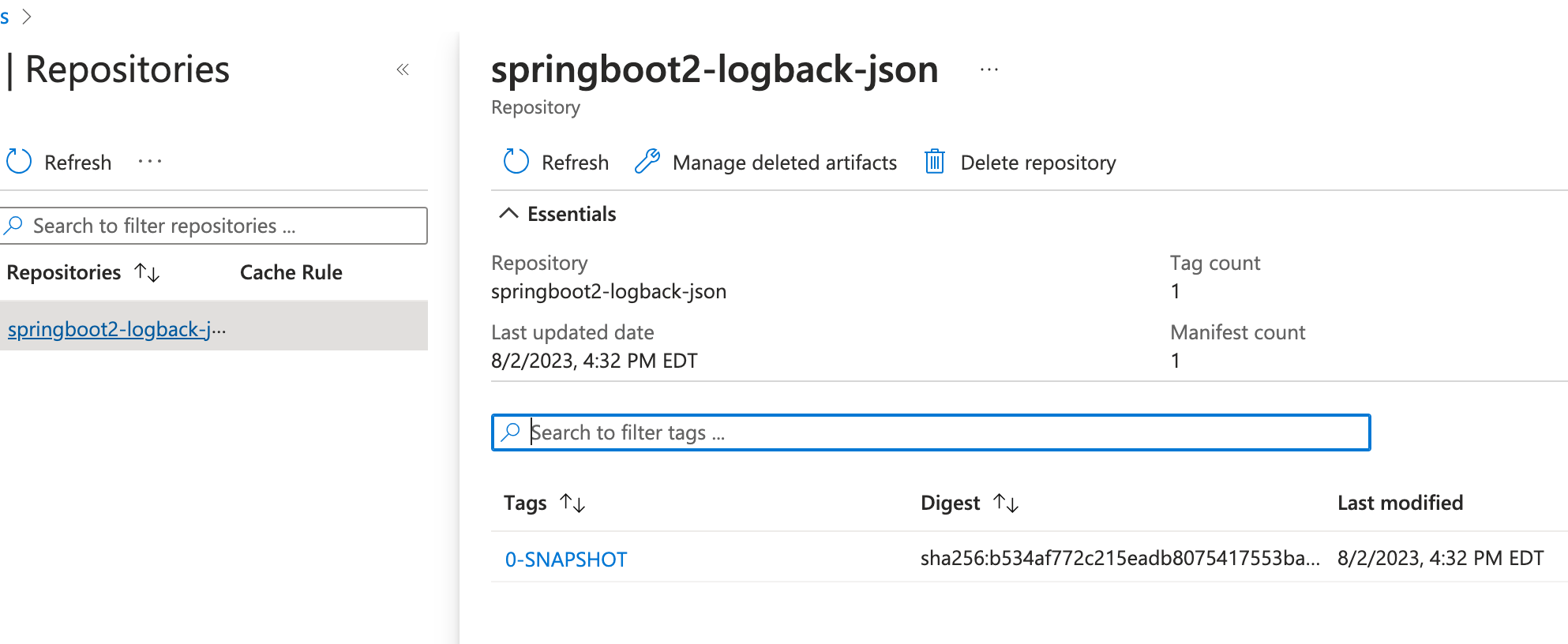

Pushing Spring Boot 2 Docker images to Microsoft ACR

1. OVERVIEW

Google’s jib-maven-plugin, Spotify’s docker-maven-plugin, and spring-boot-maven-plugin since Spring Boot 2.3 help you to build Docker images for your Spring Boot applications.

You might also want to store these Docker images in a private Docker registry.

Microsoft’s Azure Container Registry (ACR) is an inexpensive option to store both public and private Docker images.

It makes even more sense to use ACR if your organization is already invested in other Azure services.

Spring Boot application in a Docker image stored in a private Azure Container Registry


This tutorial covers setting up the Azure infrastructure and Maven configuration to push a Docker image to a private ACR repository.

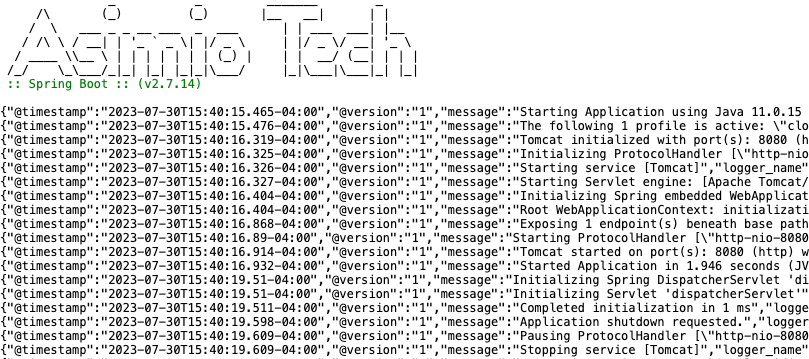

Configuring JSON-Formatted Logs in Spring Boot applications with Slf4j, Logback and Logstash

1. OVERVIEW

Logging is an important part of application development.

It helps you troubleshoot issues and follow execution flows, not only inside the application but also across requests that span multiple services.

Logging also helps you to capture data and replicate production bugs in a development environment.

Often times, searching logs efficiently is a daunting task. That’s why there are plenty of Log Aggregators such as Splunk, ELK, Datadog, AWS CloudWatch, and many more, that help with capturing, standardizing, and consolidating logs to assist with log indexing, analysis, and searching.

Standard-formatted log messages like:

2023-08-01 12:43:44.421 INFO 73710 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 8080 (http) with context path ''

are easy for engineers to read but not so easy for log aggregators to parse, especially if the log format keeps changing.

This blog post shows you how to configure Spring Boot applications to format log messages as JSON using Slf4j, Logback and Logstash, so that they are ready to be fed to log aggregators.
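
To illustrate why such lines are brittle to parse, here is a small sketch that extracts fields from the sample line with a regular expression. The `LogLineParserSketch` class and its pattern are assumptions written for this one layout, not something Spring Boot or any log aggregator ships.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class LogLineParserSketch {

    // Hand-written pattern for the sample layout: timestamp, level, pid, thread, logger, message.
    private static final Pattern DEFAULT_LAYOUT = Pattern.compile(
            "^(?<timestamp>\\S+ \\S+)\\s+(?<level>\\w+)\\s+(?<pid>\\d+) --- "
            + "\\[\\s*(?<thread>.+?)\\] (?<logger>\\S+)\\s+: (?<message>.*)$");

    private static final String[] FIELDS = {
        "timestamp", "level", "pid", "thread", "logger", "message"
    };

    // Returns the extracted fields, or an empty map when the line does not match the layout.
    public static Map<String, String> parse(String line) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher matcher = DEFAULT_LAYOUT.matcher(line);
        if (matcher.matches()) {
            for (String name : FIELDS) {
                fields.put(name, matcher.group(name));
            }
        }
        return fields;
    }
}
```

Any change to the layout, such as a reordered column or a different separator, makes `parse` return an empty map. That kind of silent breakage is what a structured format like JSON avoids.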

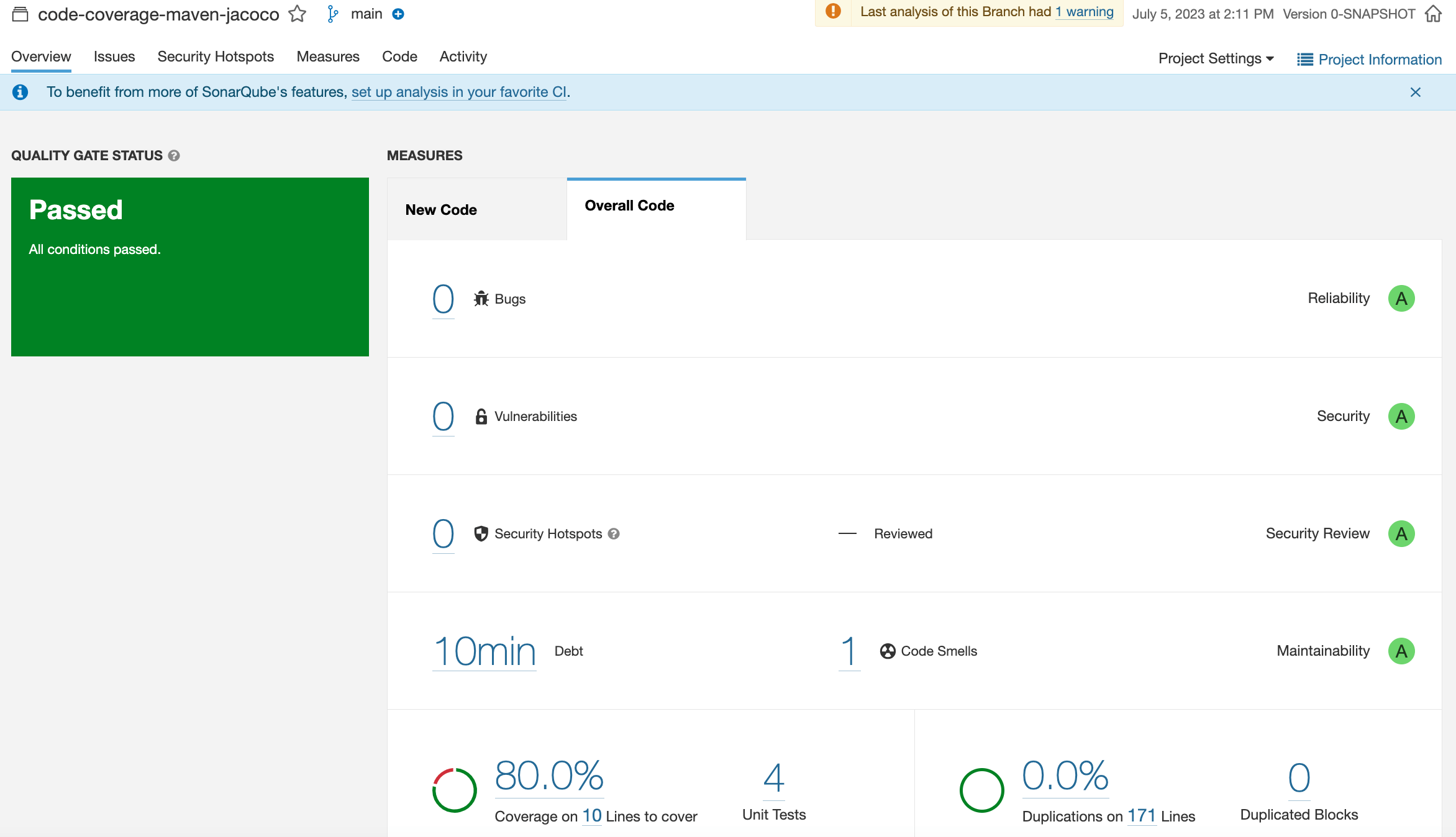

Uploading JaCoCo Code Coverage Reports to SonarQube

1. OVERVIEW

SonarQube is a widely adopted tool that collects, analyses, aggregates and reports the source code quality of your applications.

It helps teams to measure the quality of the source code as your applications mature.

SonarQube integrates with popular CI/CD tools so that you can get source code quality reports every time a new application build is triggered, helping teams to fix errors, reduce technical debt, and maintain a clean code base.

A previous blog post covered how to generate code coverage reports using Maven and JaCoCo.

This blog post covers how to generate JaCoCo code coverage reports and upload them to SonarQube.

Writing dynamic SQL queries using Spring Data JPA repositories, Hibernate and Querydsl

1. OVERVIEW

Let’s say you need to implement a RESTful endpoint where some or all of the request parameters are optional.

An example of such an endpoint looks like:

/api/films?minRentalRate=0.5&maxRentalRate=4.99&releaseYear=2006&category=Horror&category=Action

Let’s also assume you need to retrieve the data from a relational database.

Processing these requests will translate to dynamic SQL queries, helping you to avoid writing a specific repository method for each use case. Writing one method per use case would be error-prone and doesn’t scale as the number of request parameters increases.

In addition to:

- Writing dynamic SQL queries using Spring Data JPA Specification and Criteria

- Writing dynamic SQL queries using Spring Data JPA repositories and EntityManager

you could also write dynamic queries using Spring Data JPA and Querydsl.

Querydsl is a framework that helps you write type-safe queries on top of JPA and other backend technologies, using a fluent API.

Spring Data JPA provides support for your repositories to use Querydsl via the QuerydslJpaPredicateExecutor fragment.

These are some of the methods this repository fragment provides:

- findOne(Predicate predicate)

- findAll(Predicate predicate)

- findAll(Predicate predicate, Pageable pageable)

and more.

You can combine multiple Querydsl Predicates, which generates dynamic WHERE clause conditions.

But I didn’t find support to generate a dynamic number of JOIN clauses. Adding unneeded JOIN clauses to your SQL queries will impact the performance of your Spring Boot application or database.

This blog post covers how to extend Spring Data JPA for your repositories to access Querydsl objects so that you can write dynamic SQL queries.